Edition 12: AppSec Primer - How do SAST tools work?

2nd in a 4-part primer on Static Application Security Testing (SAST). This edition gives you an overview of what SAST tools look like under the hood.

In part 1 of the primer, we discussed what SAST is and why it’s needed. In this edition, we dig deeper to understand how the magic happens. Each SAST tool works slightly differently, so this edition focuses on the broad stages that most SAST tools go through, trading precision for simplicity. If you want to be a smart user of SAST tools, this edition will help you. If you are trying to build a SAST tool, you should look elsewhere (good starting point).

How does SAST work?

SAST tools take code as input and provide a list of security defects as output. Under the hood, they go through 3 distinct phases: model code, run the rule engine and generate reports. In the next few paragraphs, we will dive deeper into each phase.

Phase 1: Model code

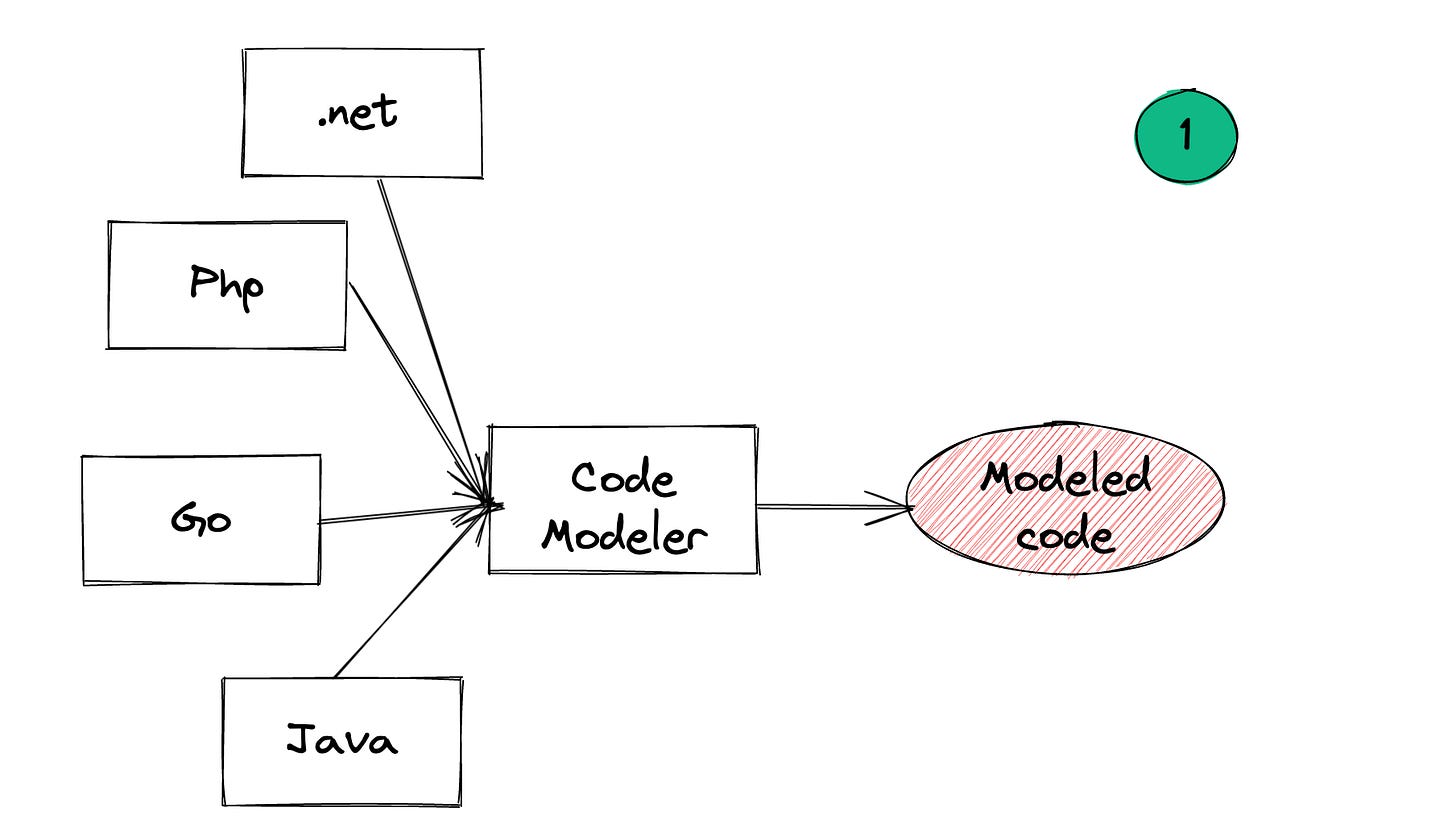

Most SAST tools support multiple programming languages (there are exceptions, e.g. GoSec, which only scans Go code). Given this, there is value in converting all code, irrespective of language, into a common format against which security rules can be tested (i.e. Phase 2). Phase 1 focuses on getting to that “common format”.

The most popular format is an Abstract Syntax Tree (AST). ASTs make it easy to query code, which in turn makes it easier to analyze for security defects. However, tools like Fortify (the OG of SAST tools) translate source code into a custom format. Depending on the programming language, the code modeler may include multiple sub-phases.
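To make “easy to query” concrete, here is a minimal sketch that uses Python’s standard-library `ast` module. Python is only an assumption for illustration, because its standard library includes a parser; real SAST tools use their own per-language modelers. The sketch parses a snippet and queries the tree for `.execute(...)` calls whose query is built by string concatenation or an f-string, which is a classic SQL injection smell. The names `SOURCE` and `find_concatenated_sql` are hypothetical.

```python
import ast

SOURCE = '''
def get_user(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = " + user_id)

def get_user_safe(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = %s", (user_id,))
'''


def find_concatenated_sql(source: str) -> list[int]:
    """Return line numbers of .execute(...) calls whose query is built dynamically."""
    tree = ast.parse(source)  # Phase 1: source text -> AST
    hits = []
    for node in ast.walk(tree):
        # Match any call of the form <something>.execute(<query>, ...)
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
        ):
            query = node.args[0]
            # Flag string concatenation (a + b) or an f-string (JoinedStr)
            if isinstance(query, ast.JoinedStr) or (
                isinstance(query, ast.BinOp) and isinstance(query.op, ast.Add)
            ):
                hits.append(node.lineno)
    return hits


print(find_concatenated_sql(SOURCE))  # [3]
```

The query matches on tree structure rather than on text, so whitespace, comments and formatting do not affect it. This independence is why an AST is a better foundation for rules than raw source.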

To put it another way, the goal of this phase is to provide a common input format to Phase 2. In some ways, this phase has little to do with security: the goal is to build a fast and accurate modeler.

Phase 2: Running rules

We now come to the “Security” part of SAST. The rule engine of a SAST tool checks the modeled code (the output of Phase 1) against a list of “rules”. If a rule fails, you have a security defect.

It’s worth diving deeper into rules (also called “checkers”, “test cases” and so on). Rules are the brain of SAST tools. You make a list of all possible security defects that can affect your code and codify them in a form that the rule engine can understand. As with security defects in general, some are specific to the technology used (e.g. using Math.random() in Java for security-sensitive values), while others are specific to the application being tested. To account for both types of defects, most SAST tools ship a set of preset rules (usually programming-language specific) and give users the ability to write custom rules (to account for business logic defects).
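The split between preset and custom rules can be sketched as rules-as-data evaluated over an AST. This is a minimal illustration and does not reflect how any particular tool stores its rules. It uses Python as the target language. The `Rule` type, the rule IDs, and the internal function `db.legacy_admin_query` that the custom rule bans are all hypothetical.

```python
import ast
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str
    message: str
    matches: Callable[[ast.AST], bool]  # predicate evaluated on every AST node


def calls(qualified_name: str) -> Callable[[ast.AST], bool]:
    """Predicate matching calls to `name(...)` or `module.name(...)`."""
    module, _, name = qualified_name.rpartition(".")

    def predicate(node: ast.AST) -> bool:
        if not isinstance(node, ast.Call):
            return False
        func = node.func
        if not module:
            return isinstance(func, ast.Name) and func.id == name
        return (
            isinstance(func, ast.Attribute)
            and func.attr == name
            and isinstance(func.value, ast.Name)
            and func.value.id == module
        )

    return predicate


# Preset rules: language-level issues that apply to any Python codebase.
PRESET_RULES = [
    Rule("PY001", "eval() on dynamic input enables code injection", calls("eval")),
    Rule("PY002", "pickle.loads() on untrusted data enables code execution", calls("pickle.loads")),
]

# Custom rule: an organization-specific ban on a (hypothetical) internal API.
CUSTOM_RULES = [
    Rule("ACME001", "Use the audited query helper instead", calls("db.legacy_admin_query")),
]


def run_rules(source: str, rules: list[Rule]) -> list[tuple[str, int]]:
    """Phase 2 in miniature: test every AST node against every rule."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        for rule in rules:
            if rule.matches(node):
                findings.append((rule.rule_id, node.lineno))
    return sorted(findings, key=lambda f: f[1])


CODE = """
import pickle
data = pickle.loads(blob)
result = eval(user_expr)
rows = db.legacy_admin_query(user_filter)
"""

print(run_rules(CODE, PRESET_RULES + CUSTOM_RULES))
# [('PY002', 3), ('PY001', 4), ('ACME001', 5)]
```

Because a custom rule is just another `Rule`, the engine does not need to distinguish between the two kinds. What separates a good custom rule engine from a basic one is how expressive the `matches` side can be.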

If the goal of phase 1 is speed and accuracy, the goal of phase 2 is exhaustiveness (preset rules) and robustness (custom rules). Good tools basically do the following:

[Preset] Ensure all known defects have a rule

[Preset] Ensure new rules are added when new defects are discovered

[Custom] Provide an easy-to-use experience for creating custom rules

[Custom] Make the custom rule engine powerful enough to cover defects emerging from complex application logic

IMO, for large, mature SAST programs, a solid custom rule engine is more important than good presets. We will talk about this more in the next edition.

Side note

The above is a simplification of what rule engines do. In reality, they will need to perform various kinds of analysis to “match” rules. Common techniques include:

Lexical analysis, or “glorified grep”. This is essentially a pattern search (often using regular expressions) for dangerous phrases. Most secret scanning rules are written this way (side note to side note: GitHub is trying something very cool with their secret scanning partner program. Check it out.)
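A “glorified grep” secret scanner can be sketched in a few lines of Python. The two patterns are illustrative assumptions and not a vetted rule set. `AKIA` followed by 16 uppercase alphanumerics is the widely known shape of an AWS access key ID, and `AKIAIOSFODNN7EXAMPLE` is the placeholder key from AWS documentation.

```python
import re

# Illustrative patterns only; real secret scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key-header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every line matching a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


sample = """aws_key = "AKIAIOSFODNN7EXAMPLE"
# a comment mentioning AKIA is not a key
-----BEGIN RSA PRIVATE KEY-----
"""
print(scan_text(sample))  # [(1, 'aws-access-key-id'), (3, 'private-key-header')]
```

The scanner has no notion of code structure. That makes it fast and language-agnostic, but also noisy: in this example the documented placeholder key gets flagged just like a real one.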

Taint analysis. Many attacks result from the way your application treats dangerous input from untrusted sources (“taint”). Taint analysis identifies where taint is introduced (a “source”) and tracks it through the application until an action is taken with it, such as writing it to a database or displaying it back to the user. The point at which taint exits the system is called a “sink”. Taint analysis checks whether tainted input flowed from source to sink without some kind of security treatment (sanitization, validation, encoding etc.). If it did, the rule engine fails the test and reports a defect.

I must confess that my mind was blown the first time I learned about taint analysis. What a phenomenally cool and powerful concept! The reality, however, is that taint analysis is computationally heavy and hence slow. In addition, the efficacy of “security treatment” is hard to evaluate. As a result, taint analysis routinely produces false positives and false negatives, which in turn degrade the experience and create a false sense of security.
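The source/sink/sanitizer idea above can be sketched as forward taint propagation over straight-line code. This is a toy under heavy assumptions. The program is a hypothetical mini-IR of `Stmt` records rather than real source code, and the function names `request_param`, `escape_sql`, `concat` and `db_execute` are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical function names, chosen for illustration.
SOURCES = {"request_param"}  # where untrusted input enters
SANITIZERS = {"escape_sql"}  # "security treatment" that removes taint
SINKS = {"db_execute"}       # dangerous operations


@dataclass
class Stmt:
    target: Optional[str]  # variable assigned (None for a bare call)
    func: str              # function being called
    args: list[str]        # variable names passed as arguments
    line: int


def find_taint_flows(program: list[Stmt]) -> list[int]:
    """Forward taint propagation over straight-line code; returns sink lines reached by taint."""
    tainted: set[str] = set()
    flows = []
    for stmt in program:
        arg_tainted = any(a in tainted for a in stmt.args)
        if stmt.func in SINKS and arg_tainted:
            flows.append(stmt.line)  # tainted data reached a sink untreated
        if stmt.target is None:
            continue
        if stmt.func in SOURCES:
            tainted.add(stmt.target)      # taint introduced
        elif stmt.func in SANITIZERS:
            tainted.discard(stmt.target)  # sanitizer output is trusted as clean
        elif arg_tainted:
            tainted.add(stmt.target)      # taint propagates through other calls
        else:
            tainted.discard(stmt.target)  # variable overwritten with clean data
    return flows


program = [
    Stmt("uid", "request_param", [], 1),
    Stmt("query", "concat", ["uid"], 2),
    Stmt(None, "db_execute", ["query"], 3),        # flagged: uid -> query -> sink
    Stmt("safe_uid", "escape_sql", ["uid"], 4),
    Stmt("safe_query", "concat", ["safe_uid"], 5),
    Stmt(None, "db_execute", ["safe_query"], 6),   # not flagged: sanitized first
]
print(find_taint_flows(program))  # [3]
```

Real engines must do this across branches, loops, function calls and files, which is where the computational cost comes from. Note also that the sketch trusts `escape_sql` blindly. That blind trust is exactly the “efficacy of security treatment” problem described above.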

I am really hoping for a tool that performs efficient taint analysis. For now, Semgrep’s approach seems promising, but it has a long way to go before it can be used across applications (read more here).

Phase 3: Report generation

This phase is straightforward. It takes the output from Phase 2, applies preferences set by the user and generates a report in a meaningful format. From PDFs (useful for audits) to bug tracking tickets (useful for developers) to SARIF (useful for security teams), modern tools do a really good job of providing a long list of options. Of course, the onus is still on the person or process running the tool to configure it in a meaningful way.

Side note: SARIF

A side effect of the proliferation of static analysis tools was that each tool had its own output format. This made it hard to build downstream systems (e.g. vulnerability management tools). To solve this problem, the Static Analysis Results Interchange Format (SARIF) was created. This standard ensures that SAST tools output all relevant details in a predictable format. The good news is that most SAST tools now support SARIF, which makes it easier to use multiple SAST tools without modifying downstream systems.
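For a sense of what “a predictable format” means, here is a minimal sketch that wraps findings in a SARIF 2.1.0 log. It uses only a handful of the fields the specification defines: `version`, `runs`, `tool.driver`, and `results` with `ruleId`, `level`, `message` and `locations`. The tool name `toy-sast` and the input dict shape are hypothetical, and consumers such as code-scanning platforms may expect additional fields.

```python
import json


def to_sarif(tool_name: str, findings: list[dict]) -> dict:
    """Wrap findings (rule_id, message, file, line) in a minimal SARIF 2.1.0 log."""
    rule_ids = sorted({f["rule_id"] for f in findings})
    return {
        "version": "2.1.0",
        "runs": [
            {
                "tool": {
                    "driver": {
                        "name": tool_name,
                        "rules": [{"id": rid} for rid in rule_ids],
                    }
                },
                "results": [
                    {
                        "ruleId": f["rule_id"],
                        "level": "error",
                        "message": {"text": f["message"]},
                        "locations": [
                            {
                                "physicalLocation": {
                                    "artifactLocation": {"uri": f["file"]},
                                    "region": {"startLine": f["line"]},
                                }
                            }
                        ],
                    }
                    for f in findings
                ],
            }
        ],
    }


sample_findings = [
    {"rule_id": "PY001", "message": "eval() on dynamic input", "file": "app/views.py", "line": 42},
]
print(json.dumps(to_sarif("toy-sast", sample_findings), indent=2))
```

A downstream system only needs to understand this shape, not the internals of each scanner. That is why swapping or adding tools does not require changing it.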

Bonus phase: Post-scan

Some SAST tools also allow you to triage results and persist the resulting state (e.g. marking false positives). Most commercial tools provide an interface where results can be consumed and processed (triage, de-duplication etc.). These features can be helpful, but they are tangential to SAST itself. Defect management is a fascinating topic that warrants further discussion, but maybe in a different edition :)
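One common way to persist triage decisions is to give each finding a stable fingerprint. A “false positive” mark can then follow the finding across scans even when line numbers shift. The sketch below is a hypothetical scheme: it hashes rule ID, file path and a whitespace-normalized code snippet, deliberately ignoring the line number. SARIF defines a `partialFingerprints` property for this kind of matching, though the hashing details here are an assumption rather than something the standard prescribes.

```python
import hashlib


def fingerprint(rule_id: str, file: str, snippet: str) -> str:
    """Stable ID for a finding; ignores line numbers and collapses whitespace."""
    normalized = " ".join(snippet.split())
    return hashlib.sha256(f"{rule_id}|{file}|{normalized}".encode()).hexdigest()


def triage(findings: list[dict], false_positives: set[str]) -> list[dict]:
    """Drop duplicate findings and those previously marked as false positives."""
    seen: set[str] = set()
    kept = []
    for f in findings:
        fp = fingerprint(f["rule_id"], f["file"], f["snippet"])
        if fp in seen or fp in false_positives:
            continue
        seen.add(fp)
        kept.append(f)
    return kept


# A reviewer marked this finding as a false positive in an earlier scan.
false_positives = {fingerprint("PY001", "app/calc.py", "eval(CONSTANT_EXPR)")}

new_scan = [
    # Same code moved to a new line: still suppressed.
    {"rule_id": "PY001", "file": "app/calc.py", "line": 12, "snippet": "eval(CONSTANT_EXPR)"},
    {"rule_id": "PY001", "file": "app/views.py", "line": 42, "snippet": "eval(user_expr)"},
    # Duplicate report differing only in surrounding whitespace: de-duplicated.
    {"rule_id": "PY001", "file": "app/views.py", "line": 42, "snippet": "    eval(user_expr)  "},
]
print([f["file"] for f in triage(new_scan, false_positives)])  # ['app/views.py']
```

Fingerprints that are too loose merge genuinely different findings, and ones that are too strict lose triage state on every refactor. This trade-off is part of why defect management deserves its own discussion.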

That’s it for today! We are halfway through the SAST primer and will focus on understanding the SAST tool landscape in the next edition. Were there any key details missing from this edition? Are there other aspects of SAST we should focus on? Let me know! You can drop me a line on Twitter, LinkedIn or email. If you find this newsletter useful, do share it with a friend, a colleague or on your social media feed.