Edition 23: A framework to securely use LLMs in companies - Part 3: Securing ChatGPT and GitHub Copilot

Part 3 of a multi-part series on using LLMs securely within your organization. This post helps you secure two of the most popular LLM-based tools used to boost productivity in the workplace.

In part 2 of the series, we spoke about managing risks that emerge from integrating LLM APIs into applications. In this edition, we will talk about a seemingly simpler problem: how do we manage the security risks that come from two specific, popular tools, ChatGPT (a chatbot powered by OpenAI’s GPT models) and GitHub Copilot (a pair programming tool that works in popular IDEs)? While both of these tools face competition from Google, Amazon, and smaller players, most companies we spoke to appear to use these two as their gateway to GenAI. This is especially true where the main goal of adopting GenAI is to increase employee productivity.

For Security teams, the simplest LLM security initiative is to clarify how these popular tools can be used in an approved manner. In other words, of all the complicated LLM security work, this is the lowest-hanging fruit. We suggest you pluck it :)

As with the first two editions of this series, much of what’s written here may soon be outdated. Given that Microsoft plays a significant role in both products, it's safe to assume they will roll out features with enterprises in mind (with security as a key part of them). This post combines broad principles (how to think about risk) with specific guidelines (e.g.: configuring Copilot), so YMMV.

Hypothesis

While these tools are proven productivity boosters, in their current avatar they are hard to manage centrally. This means you cannot centrally configure them to be secure (a governance nightmare), yet you shouldn't ban them either (that hurts productivity). While some technical solutions can help manage the risks that emerge from their usage (we explore some of them later), coherent policies and employee awareness are critical to managing these risks.

ChatGPT - Risks, controls, and usage

ChatGPT is arguably the most popular application built on LLMs. It is also fair to assume that at least some of your company’s employees already use it for work (irrespective of what your policy says :)). There is plenty of literature on the amazing things ChatGPT can do. This section focuses on the risks of insecure usage, the security controls needed to manage those risks, and how those controls can be applied.

Key risks

Recapping what was mentioned in an earlier post, here are the key risks to a company when employees leverage ChatGPT:

Sensitive data leakage: Employees may inadvertently include internal information, PII, or other sensitive data in their prompts. This means OpenAI (or plugin developers) may be able to read sensitive company information.

Overreliance on ChatGPT-generated output: Because of how LLMs such as ChatGPT are built, there is no guarantee that their output is accurate. This means that when employees rely on ChatGPT output without human validation, they run the risk of using incorrect “facts” to make critical decisions. A common use case is generating source code. While the tool can help reduce development time, it can lead to using insecure code (e.g.: the generated code is susceptible to SSRF) or unlicensed code (e.g.: the generated code is copied from an open source repository). Even outside engineering, using LLM responses to make important decisions at work can lead to unpredictable outcomes.

Security controls

Here are the key security controls that need to be in place for secure usage of ChatGPT:

Prompts should not contain company internal information such as source code, policies, secrets (API keys, passwords, AWS/GCP credentials, etc.), or employee personal information.

Prompts should not contain customer information such as their PII.

ChatGPT has 500+ plugins published by third-party developers. Security teams should publish a list of permitted plugins, and only these plugins should be used. Note that plugins are currently accessible only through the web interface, not through the API.

There’s an open question on how Security teams should evaluate plugins. We will tackle this in a separate post.

Any code generated by ChatGPT should be tested by humans or other tools before deployment.
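Wherever prompts pass through something you control (for example, a wrapper application), the first two controls can be partly automated. The sketch below is a minimal illustration under stated assumptions: the pattern names, the regular expressions, and the helpers `find_sensitive` and `is_prompt_allowed` are hypothetical, and the pattern list is far from exhaustive. A dedicated secret and PII detection tool would do much better.

```python
import re

# Hypothetical, illustrative patterns only; not an exhaustive or official list.
SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the (sorted) names of all patterns that match the prompt."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)
    )


def is_prompt_allowed(prompt: str) -> bool:
    """Allow a prompt only if no sensitive pattern matches it."""
    return not find_sensitive(prompt)
```

Regex checks like these produce both false positives and false negatives, so they complement the usage policy and awareness training rather than replace them.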

Usage methods

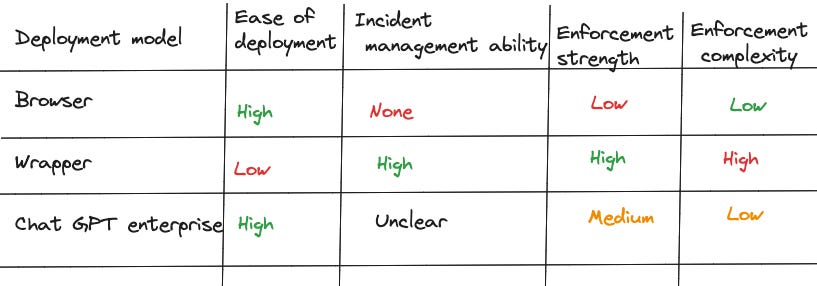

ChatGPT can be used in an enterprise in 3 ways. Depending on the method, implementing the security controls changes significantly:

Browser/Employee Sign-up: In most companies, employees use ChatGPT through a browser. While this is simple, it provides no way to enforce any of the above controls through automation. Your only options are to enforce them through policy (e.g.: publish a “ChatGPT usage policy”) and awareness (e.g.: conduct regular employee training). In theory, you could use endpoint software to monitor employees’ ChatGPT usage, but it is safe to say this creates more problems than it solves (e.g.: privacy concerns).

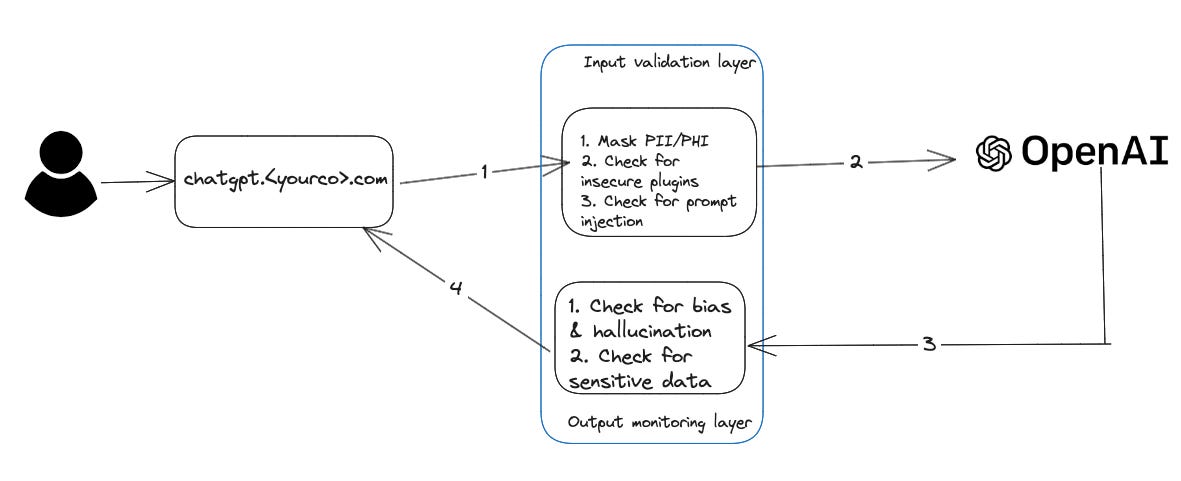

Wrapper: Write a wrapper application that sends requests to OpenAI through a gateway. The gateway validates the prompt and the output to manage data leakage and hallucination risks (this is similar to the solution recommended for the “Public SaaS” deployment in Edition 22). You also get greater control over the kind of output that should be blocked, through the “Moderations” endpoint provided by OpenAI.
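A minimal sketch of the gateway idea, assuming Python and only the standard library. The names `gateway`, `call_llm`, `is_sensitive`, and `is_flagged` are hypothetical; the checks are passed in as functions so you can plug in your own data-leakage rules and model client. The one real API used is OpenAI’s Moderations endpoint (`POST https://api.openai.com/v1/moderations`), whose JSON response contains a `results` list with a boolean `flagged` field. This sketch covers data leakage and moderation only; it makes no attempt at detecting hallucinations.

```python
import json
import urllib.request
from typing import Callable


def openai_moderation_flagged(text: str, api_key: str) -> bool:
    """Ask OpenAI's Moderations endpoint whether the text is flagged."""
    request = urllib.request.Request(
        "https://api.openai.com/v1/moderations",
        data=json.dumps({"input": text}).encode("utf-8"),  # data => POST request
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return any(result["flagged"] for result in body["results"])


def gateway(
    prompt: str,
    call_llm: Callable[[str], str],
    is_sensitive: Callable[[str], bool],
    is_flagged: Callable[[str], bool],
) -> str:
    """Check the prompt, forward it to the model, then check the response."""
    if is_sensitive(prompt):
        return "[blocked: prompt appears to contain sensitive data]"
    if is_flagged(prompt):
        return "[blocked: prompt flagged by moderation]"
    answer = call_llm(prompt)
    # Output checks: nothing sensitive or policy-violating goes back to the user.
    if is_sensitive(answer) or is_flagged(answer):
        return "[blocked: response failed output checks]"
    return answer
```

In a real deployment, `is_flagged` could be `functools.partial(openai_moderation_flagged, api_key=...)`, and `call_llm` would wrap whichever OpenAI client your team already uses. Keeping the checks injectable also makes the gateway easy to test with stubs.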

ChatGPT Enterprise: This recently released enterprise version of ChatGPT alleviates concerns about data sharing. It also ensures that prompts used by employees are not used to train ChatGPT. However, there are some open questions about this plan:

OpenAI has not published the pricing for this plan (believed to be a minimum of 100K in spend, but this isn’t official).

Employees can continue to use the regular ChatGPT from their laptops, so you will still need to enforce the controls from the “browser” option.

It is unclear whether plugins are supported. There is no mention of plugins in the official announcement.

They support SSO (#win) and provide an “admin console” with usage analytics, which can be helpful. However, since neither author has tested it, we are unsure how effective the console is or how easy it is to deploy.

GitHub Copilot - Key risks and security controls

While ChatGPT is useful for all employees at your organization, GitHub Copilot will mostly be used by software engineering teams. This makes the risks emerging from Copilot somewhat simpler to manage. However, much like ChatGPT, GitHub Copilot has minimal org-wide settings, which means all security controls need to be implemented at the developer level. Here are some controls that can help avoid data leakage and overreliance on LLM output.

Key risks

Sensitive data leakage: When Copilot is enabled, from a risk management perspective, you should assume that some or all of your code will be sent to the Copilot servers. Large production code bases tend to contain more than just code: data (SQL insert queries, schema diagrams, data dumps, etc.), secrets (API keys in code are quite common), and much more. Sending this data to the Copilot servers can be a security and privacy concern. For instance, if you have personal information of EU citizens as test data in your code base (say, in a CSV file), you run the risk of violating GDPR by enabling (or not explicitly disabling) Copilot on it.
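Before enabling Copilot on a repository, it helps to know what sensitive material is already sitting in it. A minimal sketch, assuming a handful of illustrative regular expressions (AWS access key IDs, PEM private-key headers, and email addresses as a rough stand-in for personal data). `audit_repository` is a hypothetical helper; a dedicated secret scanner will be far more thorough.

```python
import re
from pathlib import Path

# Hypothetical, illustrative patterns only; real scanners use many more rules.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def audit_repository(root: str) -> dict[str, list[str]]:
    """Map each file (path relative to root) to the pattern names found in it."""
    findings: dict[str, list[str]] = {}
    for path in sorted(Path(root).rglob("*")):
        relative = path.relative_to(root)
        # Skip directories and Git's internal object store.
        if not path.is_file() or ".git" in relative.parts:
            continue
        # Decode leniently so binary files don't crash the scan.
        text = path.read_text(encoding="utf-8", errors="ignore")
        hits = [name for name, pattern in PATTERNS.items() if pattern.search(text)]
        if hits:
            findings[str(relative)] = hits
    return findings
```

Files that show up in the report are candidates for removal, for moving out of the repository, or for the Copilot exclusion settings discussed below.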

License violation: We can break this down into 3 parts:

Leaking your company IP to Copilot.

Leaking 3rd party code that you do not have authorization to distribute (e.g.: You use a commercial 3rd party library).

Copilot may recommend code that you do not have the license to use. Because Copilot was trained on a large data set, some of that data may be under copyright or licenses that do not allow you to use it in a commercial setting.

Note: GitHub offers to defend your company if you are accused of copyright infringement for using Copilot code (See FAQ). It’s too early to know if this strategy is good enough to handle license violation lawsuits.

Security controls

It’s disappointing that Copilot does not allow companies more control over how their employees use the tool (there are a couple of usage settings in the GitHub organization settings page, which are inadequate). I fully expect this to change in the coming months. In the meantime, employees using Copilot can use the following settings to prevent accidental leakage of Personally Identifiable Information (PII) and company-specific sensitive information.

Block suggestions for specific extensions: This setting allows you to disable Copilot suggestions for specific file extensions. We recommend using it for extensions that are more susceptible to data and secret leakage, including env, tsv, csv, json, xml, yml, yaml, pem, key, ppk, pub, sql, dbsql, sqlite, htpasswd, properties, p12, pfx, conf, etc.
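To see which files in a repository the extension list above would cover (and therefore which files engineers should expect Copilot to stay silent on), a short inventory script can help. A minimal sketch in Python; `RISKY_EXTENSIONS` is taken from the list above, while `is_risky` and `risky_files` are hypothetical helpers. Dotfiles such as `.env` and `.htpasswd` need special handling because `pathlib` reports no suffix for them.

```python
from pathlib import Path

# Extensions listed above as prone to data and secret leakage.
RISKY_EXTENSIONS = {
    "env", "tsv", "csv", "json", "xml", "yml", "yaml", "pem", "key", "ppk", "pub",
    "sql", "dbsql", "sqlite", "htpasswd", "properties", "p12", "pfx", "conf",
}


def is_risky(path: Path) -> bool:
    """True if the file's extension (or dotfile name, e.g. '.env') is on the list."""
    suffix = path.suffix.lstrip(".").lower()
    if not suffix and path.name.startswith("."):
        # pathlib treats '.env' as a name with no suffix, so use the name itself.
        suffix = path.name.lstrip(".").lower()
    return suffix in RISKY_EXTENSIONS


def risky_files(root: str) -> list[str]:
    """List files under root (relative paths, sorted) whose extension is on the list."""
    return sorted(
        str(p.relative_to(root))
        for p in Path(root).rglob("*")
        if p.is_file() and is_risky(p)
    )
```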

Block suggestions matching public code: This setting prevents GitHub Copilot from suggesting code that matches public code on GitHub. This reduces the risk of Copilot reproducing code whose license may not allow you to use it (the third license risk above). This setting is on by default. Do not turn it off.

Code review: In addition to the above settings, companies should have a thorough review methodology for code generated by Copilot. While this may be hard to scale, it’s important to have at least one pair of human eyes review code generated by Copilot to detect license violations or dangerous code. Furthermore, none of the security checks applicable to your applications (e.g.: SAST, SCA, container scanning) should be skipped for Copilot-generated code.

Side note 1: If your company uses standard IDEs (e.g.: VS Code) across the board, you may be able to use endpoint protection tools to enforce these configurations on engineers’ machines.
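As a rough illustration of what such a tool could push to VS Code, here is a minimal Python sketch that merges a `github.copilot.enable` block into a user’s `settings.json`. `github.copilot.enable` is the Copilot extension’s per-language on/off setting, but it is keyed by VS Code language ID rather than file extension, so the list below only approximates the extension list above; the chosen language IDs and the helper `enforce_copilot_settings` are assumptions. It also assumes the file is plain JSON, although VS Code tolerates comments in it.

```python
import json
from pathlib import Path

# Assumed VS Code language IDs. IDs such as "csv" or "dotenv" exist only if an
# installed extension contributes them, so verify against your fleet.
DISABLED_LANGUAGES = ["json", "yaml", "xml", "sql", "ini", "properties", "plaintext"]


def enforce_copilot_settings(settings_path: Path) -> dict:
    """Merge a 'github.copilot.enable' block into a VS Code settings.json file.

    Assumes plain JSON: VS Code also accepts comments (JSONC), which json.loads rejects.
    """
    text = settings_path.read_text(encoding="utf-8") if settings_path.exists() else ""
    settings = json.loads(text) if text.strip() else {}
    enable = settings.get("github.copilot.enable")
    if not isinstance(enable, dict):
        enable = {}
    enable.setdefault("*", True)  # if unset, keep Copilot on for other languages
    for language in DISABLED_LANGUAGES:
        enable[language] = False  # overrides any per-user opt-in
    settings["github.copilot.enable"] = enable
    settings_path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return settings
```

Engineers can still edit the file afterwards, so a tool like this would need to re-run periodically to keep the configuration in place.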

Side note 2: There is some chatter about the introduction of a copilotignore file, similar to .gitignore (there is no official documentation about this feature). If and when this feature becomes official, enterprises could add this file to all their repositories. This way, the configuration can be managed centrally.

That’s it for today! Are there significant risks that this post missed? What other aspects of ChatGPT and Copilot worry you? Are there other LLM-powered tools gaining traction among employees that we need to worry about? Let us know! You can drop me (Sandesh) a message on Twitter, LinkedIn, or email. If you find this newsletter useful, share it with a friend or colleague, or on your social media feed. You can also reach out to our first guest co-author, Ashwath, on LinkedIn.