Edition 5: How to consume AppSec advice from the internet?

AppSec advice on the internet often makes me go "that sounds great, but it would never work for me". In this edition, we try to build a framework to analyze what kinds of advice may work for you.

As in most areas of computer science, learning through blogs and videos is common in AppSec. There are a few distinct kinds of content I find particularly helpful when I try to solve AppSec problems:

Detailed, tech-focused blog posts on how someone solved a particular problem. Snowflake's blog on custom rules for static analysis and Figma's blog on securing internal apps are great examples.

Conference videos from companies that have solved problems. The signal-to-noise ratio at AppSec conferences is poor, but some gems always come through. Dropbox's talk on securing internal apps is a really good example. Over the last few years, the quality of cloud security talks has gone through the roof!

I also like reading and watching the takes of consultants who repeat their solutions across various clients. These can be a mile wide and an inch deep, but they do a great job of giving you the lay of the land. They can also sometimes get salesy, but that's fine. The good ones do it subtly and, to be honest, it's completely OK to sell what you are doing through good content (better than annoying banner ads, I guess :) )

Finally, there are organizations where "publishing relevant content" is part of the job description. Think analyst firms like Gartner or non-profits like OWASP. Again, the signal-to-noise ratio here can be poor, but they are really good at putting together a bunch of resources on relevant topics.

While these resources are helpful, there is usually a problem of relevance. Often, I read a really good blog post and go "this is great, but it would never work for us". This happened so often that I figured I needed a mental model to understand what kind of AppSec advice would work for me and what would not.

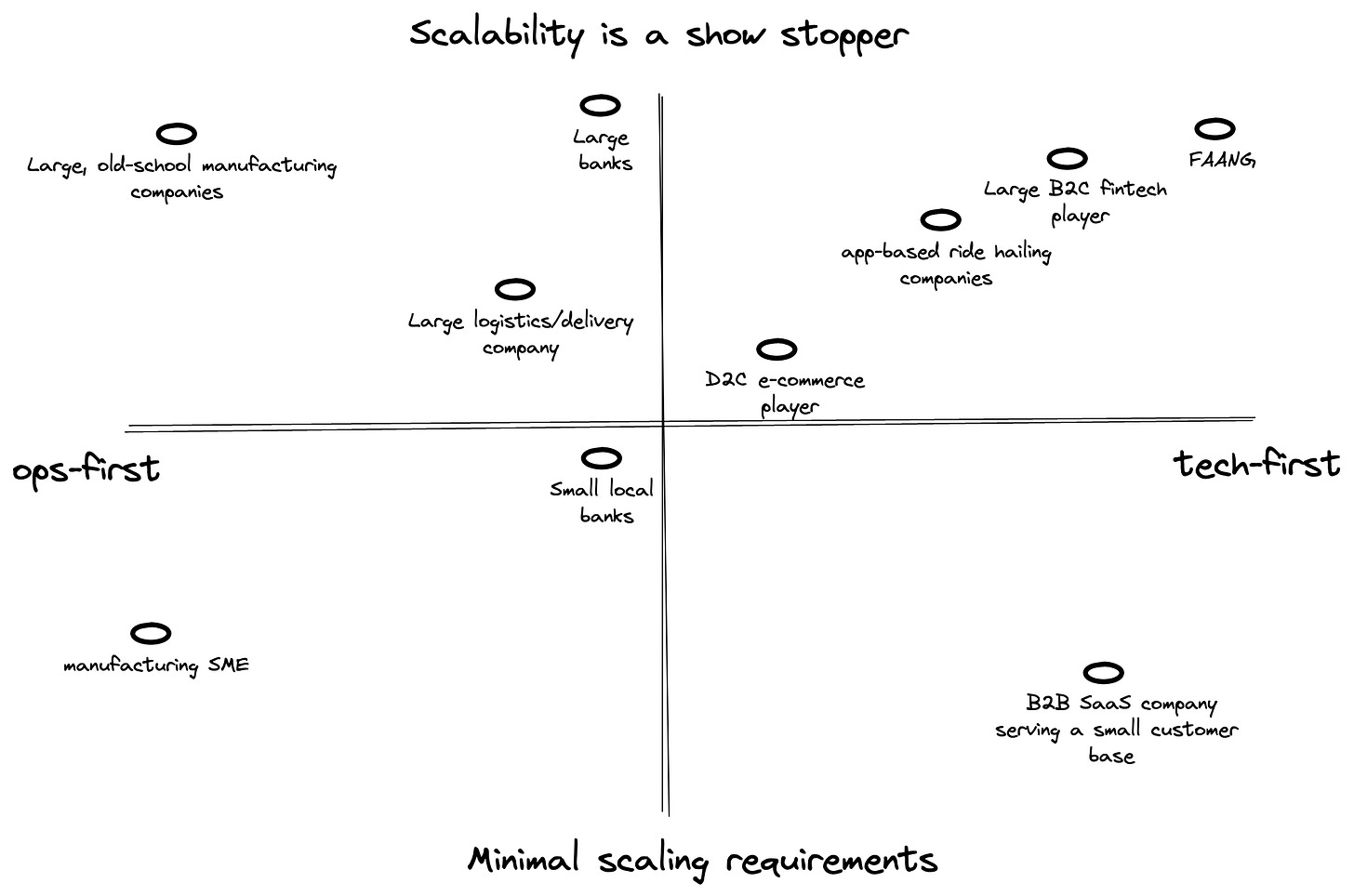

The ScaleTechOps framework for analysing AppSec advice

OK, that’s a terrible name, but hear me out :)

The lightbulb moment came during a conversation with a friend. His insight was that InfoSec in an ops-first company is very different from InfoSec in a tech-first company. This made a lot of sense for AppSec too.

So, if a consultant who mostly works with old-school financial services companies (say, banks and insurance companies) puts out a piece about building a static analysis program, the ideas presented may not work for a tech-driven, mobile-first company building a super-app. An ops-first company will tend to rely on excellent program management (good business analysts, a regular cadence, clarity of roles among stakeholders) to achieve most goals. A tech-first company will use engineering solutions to reach the same objectives (automation, SaaS v/s contractors etc.).

Side note: Based on my experience, it's probably true that most ops-first companies are moving towards becoming tech-first companies. However, there is still enough of a difference for our use case.

This distinction still does not answer the question of scale. A recently launched tech startup and Netflix can both be considered "tech-first", but the scale requirements (in terms of the amount of software and the number of employees) are way higher at Netflix. So, "scale" is the second yardstick I use when evaluating whether the advice I received may work for me.

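So, the framework essentially is this: place companies on two axes, tech-first v/s ops-first and scale, which gives you four quadrants.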

When you consume AppSec ideas from anyone, figure out which quadrant their ideas are relevant to. If your company is in the same quadrant, the chances of the advice being relevant are much higher than if it comes from someone in a different quadrant.
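To make the quadrant comparison concrete, here is a minimal Python sketch. It encodes the two axes (tech-first v/s ops-first, and scale) and compares the position of whoever is giving the advice with your own. All names (`Culture`, `Scale`, `Org`, `relevance`) are hypothetical, and the middle "partially relevant" tier for companies that share only one axis is an assumption added for illustration; the framework itself only says that a matching quadrant makes advice more likely to apply.

```python
from dataclasses import dataclass
from enum import Enum


class Culture(Enum):
    """First axis: how the company prefers to reach its goals."""
    TECH_FIRST = "tech-first"  # engineering solutions, automation
    OPS_FIRST = "ops-first"    # program management, process


class Scale(Enum):
    """Second axis: amount of software and number of employees."""
    MINIMAL = "minimal"
    HIGH = "high"


@dataclass(frozen=True)
class Org:
    # Hypothetical description of a company's position in the framework.
    name: str
    culture: Culture
    scale: Scale


def relevance(advice_source: Org, you: Org) -> str:
    """Rough heuristic: count how many axes the two companies share.

    Both axes match (same quadrant) -> advice is more likely to apply.
    The one-axis middle tier is an illustrative assumption.
    """
    shared = int(advice_source.culture == you.culture) + int(advice_source.scale == you.scale)
    return {
        2: "likely relevant",
        1: "partially relevant",
        0: "treat with caution",
    }[shared]


if __name__ == "__main__":
    startup = Org("recently launched startup", Culture.TECH_FIRST, Scale.MINIMAL)
    big_tech = Org("large streaming company", Culture.TECH_FIRST, Scale.HIGH)
    bank = Org("old-school bank", Culture.OPS_FIRST, Scale.HIGH)

    print(relevance(big_tech, startup))  # shares only the tech-first axis
    print(relevance(bank, startup))      # shares neither axis
```

Running it prints `partially relevant` and then `treat with caution`. The value is less in the code than in the habit it encodes: write down both positions explicitly before deciding how much weight a piece of advice deserves.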

If you are in the business of giving AppSec advice (like I was in my previous job), it may be useful to understand which quadrant the receiver of the advice is in and modify your advice accordingly (assuming you actually have expertise in that area :) ). The list below of what it means (from an AppSec perspective) to be in each quadrant may help.

Tech-first:

Minimum Viable Products (MVPs) are a great way to show value. Build something small, show it to a few people and see how they react. If this goes well, build adoption. So, if you want to start using a new log monitoring tool, deploy it on a few servers alongside the existing system, gather data on the differences and then present the results to stakeholders.

Don't do anything that does not have a path to automation. It's fine to start manually, but have a plan for automation. For example, nothing pisses off tech-first companies like a large manual pen-test program as a key security activity.

Ops-first:

Value needs to be shown through analysis. RFPs, case studies and evaluation criteria are key steps before value can be proved. Side note: This is probably why consulting firms are invaluable to ops-first companies.

Every solution needs a solid implementation plan up front. This does not mean just a spreadsheet; things like an escalation matrix, SLAs and reporting details need to be decided before adoption. It is especially important to define roles and responsibilities, and tools like a RACI matrix can really help.

Scalability is a show-stopper:

The cost of adopting the wrong tool or solution can be high. Solid evaluation works better than trial and error. So, if you are in the market for a new SAST tool, building a robust set of evaluation criteria is important (something like the one we discussed in Edition 3).

Think of adopting new tools/solutions as turning a dial rather than flipping a switch. You bought a shiny new RASPIASTDASTASAST tool? Roll it out to a subset of users (e.g., a friendly business unit), document the lessons learnt and then roll it out to others.

Robust processes (enforced through humans or tech) are important. Individual heroism can get a project started, but won't sustain over time.

Minimal scaling requirements:

Tolerance for failed experiments is usually higher, but the bandwidth of each employee is low. Prefer experimentation over evaluation.

Most initiatives are driven by people instead of processes. Playing to the key person's strengths (say, the CTO's) is important. If she prefers outsourcing over a SaaS platform for pen testing, just go with that.

That's it for today! I would love to hear your thoughts on this framework. Is it too simplistic? Are there other axes we should use instead of scale and tech-first v/s ops-first? Do you think this approach will work the next time you research solutions to an AppSec problem? Drop me a line on Twitter, LinkedIn or email. If you find this newsletter useful, do share it with a friend, a colleague or on your social media feed.

In-house development v/s outsourcing plays a crucial role. Most ops-first companies rely on offshore development, and security is not taken into consideration during contract negotiations, so driving security initiatives is tough. Tech-first companies prefer in-house development, which provides flexibility for new initiatives and an organic environment for automation. Compliance and regulation also play a big role: in ops-first companies they set a low bar (for example, security maturity is the bare minimum in medical devices; we need more regulation like GDPR across the globe to change this attitude), which hinders motivation. In tech-first companies, by contrast, security is a market differentiator and drives product revenue, which in turn drives the security budget. So, aligning with the strategic driving factors of an organization will play a role in the success of AppSec program initiatives.